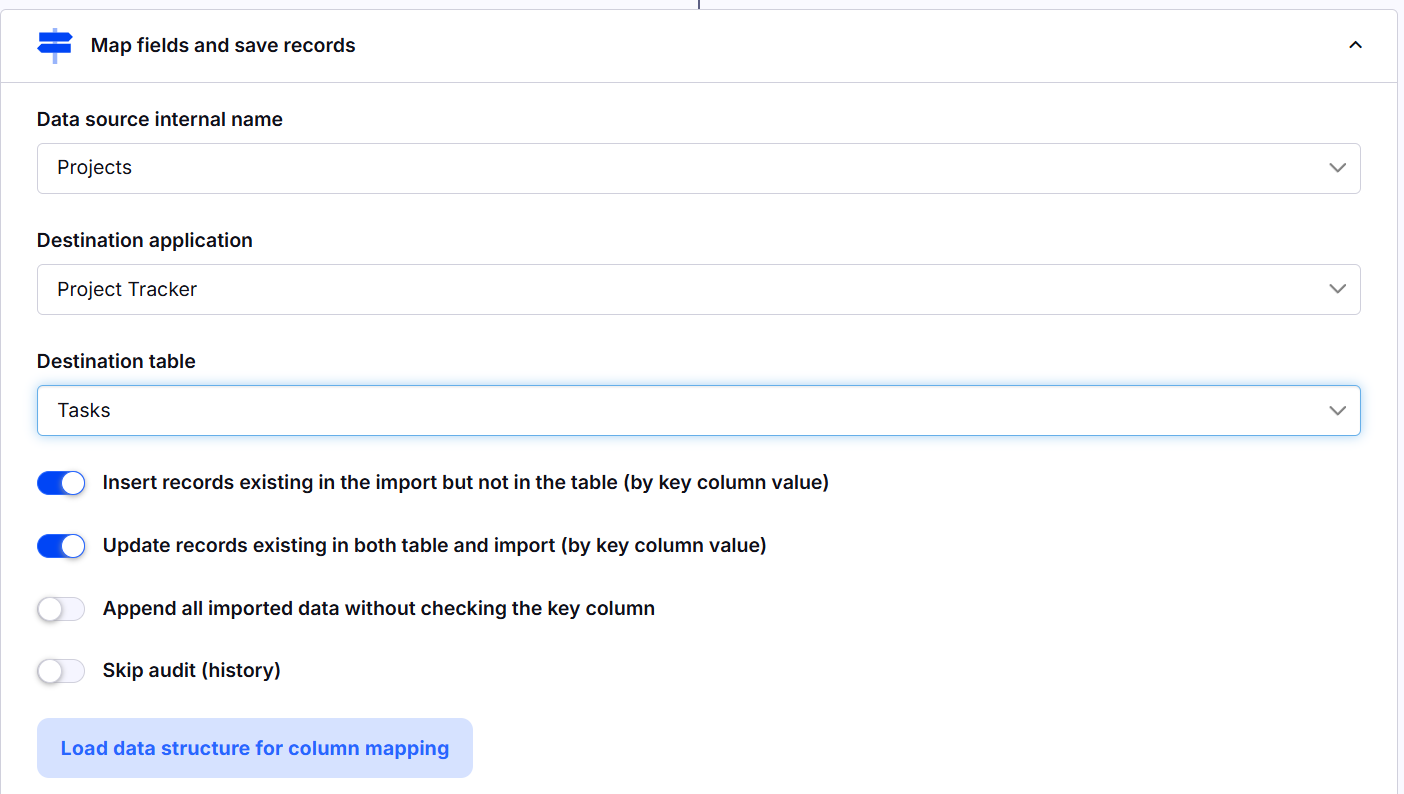

Map Fields and Save Records

Description

The Map Fields and Save Records workflow action processes imported data by mapping source columns to target fields and saving the resulting records into a designated table in a Tabidoo application. It follows the Load External Data action or Changed records, and refers to the data by the internal name of the data source defined in that earlier step.

Configuration Options

Data Source Internal Name

The name of the data property containing the imported data structure from the Load External Data action, or the variable doo.model.

Destination Application

Specifies the target Tabidoo application where the records will be saved.

Ensure the user has access and sufficient permissions for this application.

Destination Table

Select the table within the destination application where the records will be stored.

The table must exist in the application and be accessible with the user's permissions.

Note: Ensure that the table's structure matches the data format being imported. For example, if the source data contains dates, the destination table should have fields with the correct date format.
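The compatibility requirement in the note above can be illustrated with a small pre-import check. This is only a conceptual sketch in Python and not part of Tabidoo: the schema, the field names (`name`, `amount`, `dueDate`) and the function `find_type_mismatches` are hypothetical and exist only to show what "structure matches the data format" means in practice.

```python
from datetime import date
from typing import Any

Row = dict[str, Any]

# Hypothetical destination schema: field name -> expected Python type.
SCHEMA: dict[str, type] = {"name": str, "amount": float, "dueDate": date}


def find_type_mismatches(rows: list[Row], schema: dict[str, type]) -> list[str]:
    """List every value whose type differs from the type the schema expects."""
    problems: list[str] = []
    for index, row in enumerate(rows):
        for field, expected in schema.items():
            value = row.get(field)
            # Missing or empty values are not treated as mismatches here.
            if value is not None and not isinstance(value, expected):
                problems.append(
                    f"row {index}: {field} expected {expected.__name__}, "
                    f"got {type(value).__name__}"
                )
    return problems


rows = [
    {"name": "Invoice A", "amount": 120.5, "dueDate": date(2024, 3, 5)},
    {"name": "Invoice B", "amount": "99", "dueDate": "05.03.2024"},
]
problems = find_type_mismatches(rows, SCHEMA)
```

In this sketch the second row is reported twice, because its amount and its date both arrive as text. Values like these would need to be converted during column mapping, or the destination fields would need a matching type.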

Processing Options

The following checkboxes control how the imported records are handled:

Insert records existing in the import but not in the table (by key column value): Adds new records to the destination table if they do not already exist, based on the key column.

Update records existing in both table and import (by key column value): Modifies existing records in the destination table with the corresponding values from the import.

Append all imported data without checking the key column: Adds all imported data to the destination table without validating against the key column.

Skip audit (history): Prevents the creation of audit logs for this action, useful for optimizing performance. Caution: Skipping audit logs may result in missing records of changes for compliance or tracking purposes. Use this option only for bulk data loads when audit history is not necessary.
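The effect of the insert, update, and append options can be modeled with a short Python sketch. It is a conceptual illustration only and does not reflect how Tabidoo implements the action: the function `apply_import` and its parameters are hypothetical, and the sketch assumes that the append option bypasses the key comparison entirely.

```python
from typing import Any

Row = dict[str, Any]


def apply_import(
    table: list[Row],
    imported: list[Row],
    key: str,
    insert_new: bool = True,
    update_existing: bool = True,
    append_all: bool = False,
) -> list[Row]:
    """Return a new table after applying the import (conceptual model only)."""
    result = [dict(row) for row in table]  # copy so the input is not modified

    if append_all:
        # Assumption: appending ignores the key column completely.
        result.extend(dict(row) for row in imported)
        return result

    # Index the existing rows by their key column value.
    by_key = {row[key]: row for row in result}
    for row in imported:
        existing = by_key.get(row[key])
        if existing is None:
            if insert_new:
                new_row = dict(row)
                result.append(new_row)
                by_key[row[key]] = new_row
        elif update_existing:
            existing.update(row)
    return result


table = [{"id": 1, "name": "Ann"}, {"id": 2, "name": "Bob"}]
imported = [{"id": 2, "name": "Robert"}, {"id": 3, "name": "Cy"}]

synced = apply_import(table, imported, key="id")
insert_only = apply_import(table, imported, key="id", update_existing=False)
appended = apply_import(table, imported, key="id", append_all=True)
```

With both insert and update enabled, the record with key 2 is overwritten and the record with key 3 is added. With insert only, the existing record with key 2 stays unchanged. With append, the table ends up with two records that share key 2, which is why appending is best reserved for data that has no meaningful key.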

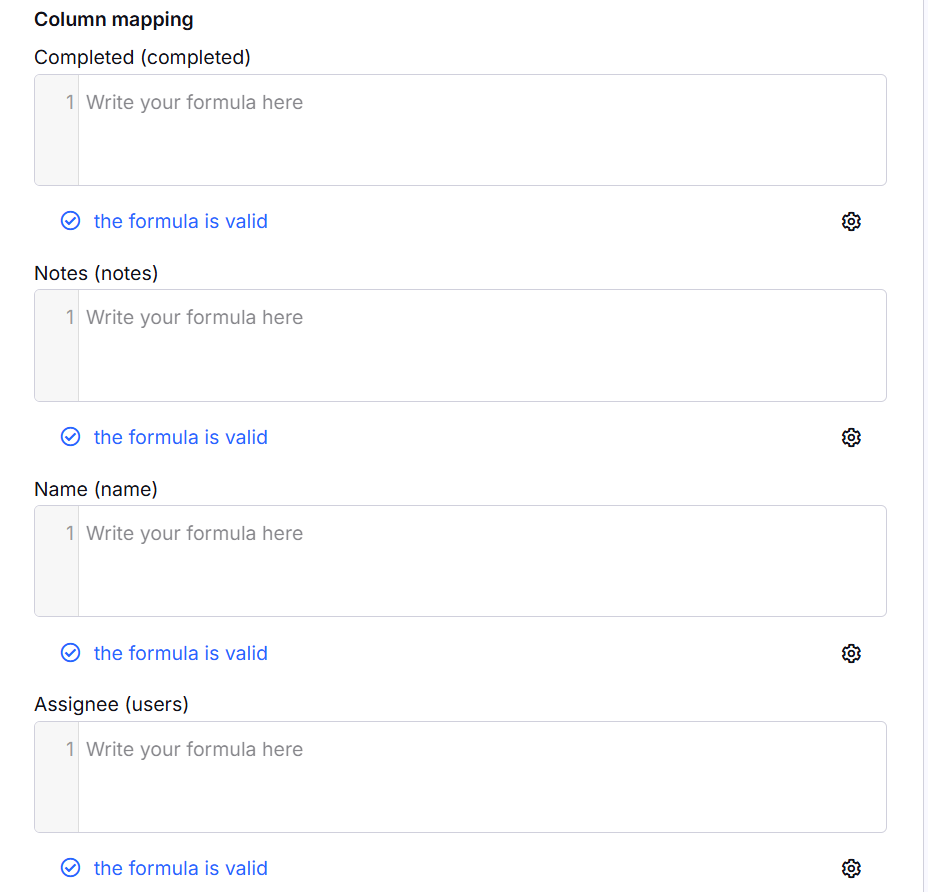

Column Mapping

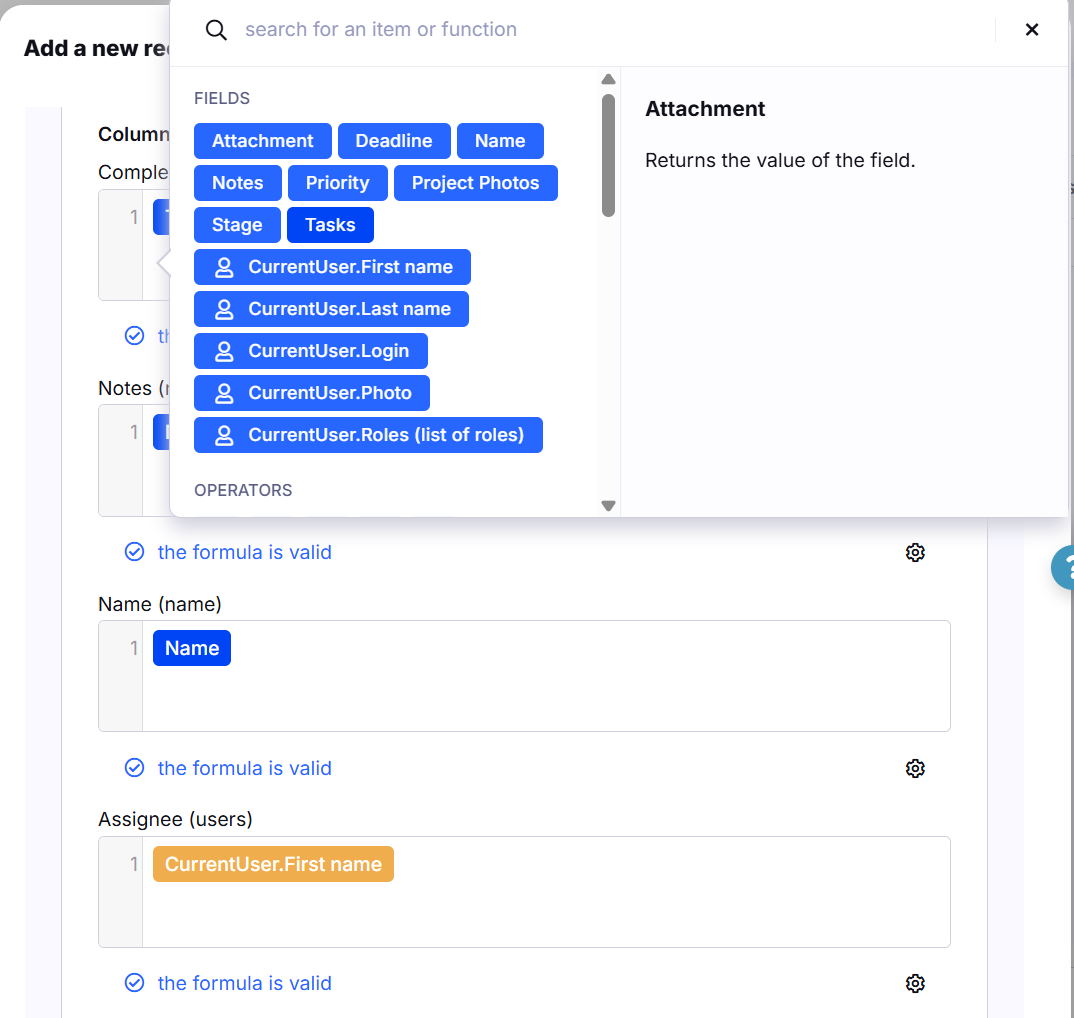

Automatic Data Structure Loading: Once all configuration options are set correctly, the column mapping interface is generated automatically. It lists the fields of the destination table and shows the available fields from the data source, along with simple operators and functions.

Functions: Text processing, mathematical operations, and date manipulation can be applied during mapping. For each mapped field, a valid formula is confirmed with the message "the formula is valid".

Mapping Interface

Displays the source columns from the data structure and the corresponding destination fields.

Users can manually adjust mappings to ensure accuracy.

Supports transforming data formats or applying specific rules during mapping.
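The kind of transformation described above (text processing, a mathematical operation, and date reformatting applied while mapping) can be sketched in Python. This is not Tabidoo's formula syntax. The source column names, the destination field names, and the helper `map_row` are all hypothetical, and the source dates are assumed to arrive as text in day.month.year form.

```python
from datetime import datetime
from typing import Any, Callable

Row = dict[str, Any]

# Hypothetical mapping: destination field -> formula over the source row.
MAPPING: dict[str, Callable[[Row], Any]] = {
    # Text processing: trim and join two source columns.
    "fullName": lambda src: f"{src['first_name'].strip()} {src['last_name'].strip()}",
    # Mathematical operation: convert text to numbers, then multiply.
    "totalPrice": lambda src: round(float(src["unit_price"]) * int(src["quantity"]), 2),
    # Date manipulation: parse day.month.year text into an ISO date string.
    "orderDate": lambda src: datetime.strptime(src["order_date"], "%d.%m.%Y").date().isoformat(),
}


def map_row(source: Row, mapping: dict[str, Callable[[Row], Any]]) -> Row:
    """Build one destination record by evaluating each field's formula."""
    return {field: formula(source) for field, formula in mapping.items()}


source_row = {
    "first_name": " Ada ",
    "last_name": "Lovelace",
    "unit_price": "19.90",
    "quantity": "3",
    "order_date": "05.03.2024",
}
mapped = map_row(source_row, MAPPING)
```

Each destination field gets its own formula, which mirrors the per-field validation in the mapping interface: a formula that fails for one field does not say anything about the others.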

Use Cases

Data Migration: Efficiently move records from external sources into Tabidoo tables.

Data Synchronization: Update existing records and add new ones in a single run, matched by key column value.

ETL Processes: Transform and load external data into a structured format for reporting and analytics.

Audit Bypass: Skip logging for specific operations to enhance performance during bulk processing.

Dynamic Field Mapping: Apply calculations and transformations to data during field mapping.

Notes

Dynamic Field Mapping: Pay attention to the data format when mapping fields. For example, if the source data contains dates, ensure that the destination table has date-type fields. Use functions to transform or reformat data (e.g., converting text to numbers or formatting dates).

Table Compatibility: Ensure that the destination table's structure is compatible with the imported data format. For example, the destination table should have the correct field types (e.g., text, number, date) to match the incoming data.

Skip audit (history): Use this option sparingly. Skipping audit logs may be helpful for bulk imports but can cause issues when tracking changes or meeting compliance requirements.

Permissions: Verify that the user has the appropriate permissions for both the source and destination applications. Insufficient permissions may cause errors during the import process.

Performance Considerations: When importing large datasets, consider enabling the Skip audit (history) option to improve performance, but first confirm that you do not need the change history for the affected records.